A cloud-based data-sharing and analysis cyberinfrastructure for neuroinformatics

Richard Stoner (Autism Center of Excellence, University of California, San Diego), Stephen Larson (Whole Brain Project, University of California, San Diego), Ilya Zaslavsky (San Diego Supercomputer Center), Sean Hill (International Neuroinformatics Coordinating Facility), Mark Ellisman (Whole Brain Project, University of California, San Diego), Eric Courchesne (Autism Center of Excellence, University of California, San Diego)

A capable cyberinfrastructure is essential to realize the potential of neuroinformatics. In a world of rapidly growing data and computational demands, the expertise needed to design and deploy an efficient, scalable infrastructure often exceeds the resources of an individual neuroscientist or laboratory. As a result, researchers are frequently locked out of a rich and growing set of tools from several informatics fields. This barrier limits what individual researchers can accomplish and reduces the potential for data sharing, reuse, and publication. To overcome it, we present a strategy, an architecture design, and a prototype implementation of a managed data-sharing and analysis cyberinfrastructure built with tools from the INCF community on Amazon Web Services' (AWS) Elastic Compute Cloud (EC2).

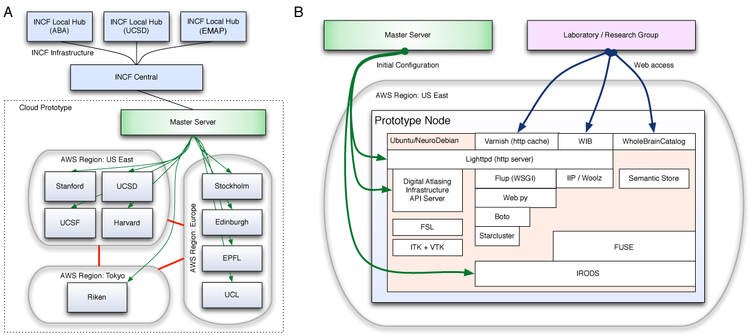

Three issues are addressed in the platform architecture: node instantiation, analysis, and federation. At the highest level of abstraction, the platform consists of a single master server and a collection of nodes instantiated by individual researchers or groups.

The master server is responsible for node instantiation, monitoring, and global user management. To facilitate rapid deployment, a web interface lets researchers easily create a custom node on the AWS cloud, configured for their scientific application and expected computational needs. Once a node is launched, application logic on the master server configures it for decentralized communication and data sharing with other nodes in the INCF network.
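The master server's bookkeeping can be sketched in Python, the language the nodes already use as glue. This is a minimal illustration under stated assumptions, not the platform's implementation: the workload tiers, the instance-type choices, the placeholder machine image ID, and the `configure-node` bootstrap command are all hypothetical. The sketch keeps a global user list, records each node request, tracks reported node status, and builds a launch request whose keys follow the keyword arguments of boto3's EC2 `run_instances` call, without contacting AWS.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical mapping from expected computational needs to EC2 instance types.
INSTANCE_TIERS = {"light": "m1.small", "moderate": "m1.large", "heavy": "c1.xlarge"}


@dataclass
class Node:
    node_id: str
    owner: str
    application: str
    launch_request: dict
    status: str = "pending"


@dataclass
class MasterServer:
    base_image_id: str  # placeholder ID of a NeuroDebian-based machine image
    users: Set[str] = field(default_factory=set)  # global user management
    nodes: Dict[str, Node] = field(default_factory=dict)

    def register_user(self, username: str) -> None:
        self.users.add(username)

    def request_node(self, owner: str, application: str, workload: str) -> Node:
        """Record a node request and build its (unsent) EC2 launch parameters."""
        if owner not in self.users:
            raise PermissionError(f"unknown user: {owner}")
        node_id = f"node-{len(self.nodes) + 1}"
        # Keys mirror the keyword arguments of boto3's EC2 client run_instances().
        request = {
            "ImageId": self.base_image_id,
            "InstanceType": INSTANCE_TIERS[workload],
            "MinCount": 1,
            "MaxCount": 1,
            # Hypothetical bootstrap command that would configure the node
            # for its application and join it to the federation.
            "UserData": f"#!/bin/sh\nconfigure-node --app {application} --id {node_id}\n",
        }
        node = Node(node_id, owner, application, request)
        self.nodes[node_id] = node
        return node

    def set_status(self, node_id: str, status: str) -> None:
        """Monitoring hook: record the latest status reported for a node."""
        self.nodes[node_id].status = status

    def nodes_with_status(self, status: str) -> List[str]:
        return [n.node_id for n in self.nodes.values() if n.status == status]
```

Keeping the launch request as plain data means the provisioning logic can be tested offline; a real master would hand the dictionary to an EC2 client and update `set_status` from the instance state it observes.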

Data analysis is furnished by a set of tools configured to be used either as standalone applications or as focused pipelines defined within the INCF community. All nodes share a common base (NeuroDebian), operate on a common dataspace (iRODS+FUSE), and rely heavily on Python to link the various components together. In addition to the local computation provided by the node, additional compute resources can be provisioned on AWS with StarCluster and managed with a scientific workflow engine (Kepler). Data visualization and interaction are provided by a set of web-driven tools (WholeBrainCatalog, CCDB WebImageBrowser).
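Because iRODS is mounted through FUSE, a node's shared dataspace looks like an ordinary directory tree, so Python glue can chain tools using plain file paths. The sketch below assumes a hypothetical mount point (`/mnt/irods`) and uses two toy text-processing steps as stand-ins for real analysis tools, which in practice would more likely be invoked as external programs; it illustrates the idea and is not the pipeline format used by the INCF community or by Kepler.

```python
from pathlib import Path
from typing import Callable, Iterable, List, Tuple

# Hypothetical location where the iRODS collection is mounted via FUSE.
DATASPACE = Path("/mnt/irods")

# A step reads one file and writes its result to another.
Step = Callable[[Path, Path], None]


def find_inputs(collection: Path, pattern: str) -> List[Path]:
    """List input files in a (FUSE-mounted) collection, in a stable order."""
    return sorted(collection.glob(pattern))


def run_pipeline(inputs: Iterable[Path], steps: List[Tuple[str, Step]], out_dir: Path) -> List[Path]:
    """Apply the steps in order; each step's output file feeds the next step."""
    out_dir.mkdir(parents=True, exist_ok=True)
    results = []
    for src in inputs:
        current = src
        for name, step in steps:
            # e.g. scan1.txt -> scan1.strip.txt -> scan1.strip.upper.txt
            dst = out_dir / f"{current.stem}.{name}{current.suffix}"
            step(current, dst)
            current = dst
        results.append(current)
    return results


# Toy steps standing in for real neuroimaging tools.
def strip_blank_lines(src: Path, dst: Path) -> None:
    lines = src.read_text().splitlines(keepends=True)
    dst.write_text("".join(line for line in lines if line.strip()))


def to_upper(src: Path, dst: Path) -> None:
    dst.write_text(src.read_text().upper())
```

On a node, a call such as `run_pipeline(find_inputs(DATASPACE / "lab", "*.txt"), [("strip", strip_blank_lines), ("upper", to_upper)], DATASPACE / "lab" / "derived")` would write derived files back into the common dataspace rather than onto node-local storage.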

Upon instantiation, each node becomes part of the INCF network of federated hubs and exposes the common web-service API described by the INCF Digital Atlasing Infrastructure. Through a web-based portal, individual labs can easily indicate which data on their node are available for publication to the community. Many large-file transfer and replication issues are minimized by using AWS's international infrastructure, which places each node physically close to its home laboratory and topologically close to the INCF network.
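The publication step can be sketched as follows. This is a hypothetical illustration, not the INCF Digital Atlasing Infrastructure API: the `Dataset` and `NodeCatalog` names, the JSON layout, and the keyword search are all assumptions. Each node includes in its public listing only the datasets its lab has flagged for publication through the portal, and a federated query merges the listings returned by several nodes.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, Iterable, List, Tuple


@dataclass
class Dataset:
    dataset_id: str
    title: str
    published: bool = False  # set when the lab flags the dataset in the portal


@dataclass
class NodeCatalog:
    node_id: str
    datasets: Dict[str, Dataset] = field(default_factory=dict)

    def add(self, ds: Dataset) -> None:
        self.datasets[ds.dataset_id] = ds

    def publish(self, dataset_id: str) -> None:
        self.datasets[dataset_id].published = True

    def public_listing(self) -> str:
        """JSON document a node might serve; unpublished datasets are omitted."""
        public = [asdict(d) for d in self.datasets.values() if d.published]
        return json.dumps({"node": self.node_id, "datasets": public}, sort_keys=True)


def federated_search(listings: Iterable[str], keyword: str) -> List[Tuple[str, str]]:
    """Case-insensitive title search across the public listings of many nodes."""
    hits = []
    for raw in listings:
        doc = json.loads(raw)
        for d in doc["datasets"]:
            if keyword.lower() in d["title"].lower():
                hits.append((doc["node"], d["dataset_id"]))
    return hits
```

Filtering at the node, rather than at the searcher, keeps unpublished data from ever leaving the lab's node, which matches the portal-driven opt-in publication described above.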

Together, the components presented here create a powerful tool to enhance the research capabilities of an individual researcher while increasing the value of the data itself through analysis and federation.

Latest news for Neuroinformatics 2011
