Speeding 25 fold Neural Network Simulations with GPU Processing.

Olivier F.L. Manette (CNRS), German Hernandez (Systems and Computing, National University of Colombia, Bogota)

OpenCL (Open Computing Language) is a common standard for cross-platform parallel programming using the CPU, the GPU, or both. OpenCL can greatly improve the speed and responsiveness of scientific and medical image-processing software.

Here an application of OpenCL to neural network simulation is presented. The application uses a detailed morphological neuron model comprising a dendritic tree, synapses located at different positions along the dendrite, and a soma. For every neuron in the network, the following processes are simulated: 1) the arrival of a spike at a synapse, which triggers an EPSP in the dendrite, modeled by an alpha function; 2) the propagation of the depolarization to the soma, simulated here using a cable model; and 3) the response of the neuron when the depolarization reaches the soma: if the neuron's threshold is reached, an action potential is triggered that depolarizes other neurons of the network as a function of the connectivity; otherwise the neuron remains at rest.
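The three per-neuron steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function names, parameter values, and units are assumptions, and the cable model is replaced by a much simpler stand-in (steady-state exponential attenuation with a length constant), since the abstract does not specify the cable equations used.

```python
import math

def alpha_epsp(t, t_spike, amplitude=1.0, tau=2.0):
    """Alpha-function EPSP at time t (ms) for a spike arriving at t_spike.

    `amplitude` and `tau` are illustrative values, not taken from the abstract.
    """
    s = t - t_spike
    if s < 0:
        return 0.0  # no response before the spike arrives
    # Rises and decays smoothly; peaks at s == tau with value `amplitude`.
    return amplitude * (s / tau) * math.exp(1.0 - s / tau)

def attenuate(v, distance, length_constant=200.0):
    """Simplified stand-in for the cable model (assumption): steady-state
    passive attenuation over `distance` (um) from synapse to soma."""
    return v * math.exp(-distance / length_constant)

def soma_depolarization(t, synapses):
    """Sum the attenuated EPSPs of all synapses.

    `synapses` is a list of (t_spike, distance, amplitude) tuples.
    """
    return sum(attenuate(alpha_epsp(t, ts, amp), d) for ts, d, amp in synapses)

def fires(depolarization, threshold=1.0):
    """Step 3: an action potential is triggered if the threshold is reached."""
    return depolarization >= threshold
```

For example, `fires(soma_depolarization(2.0, [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]))` evaluates two coincident synapses at the soma at their peak, whereas a single synapse placed one length constant away contributes only about 37% of its peak amplitude.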

To speed up simulations, two strategies have commonly been used: 1) simplifying the neuron model, or 2) creating a multi-threaded version of the application and running it on a cluster of several computers or on a supercomputer.

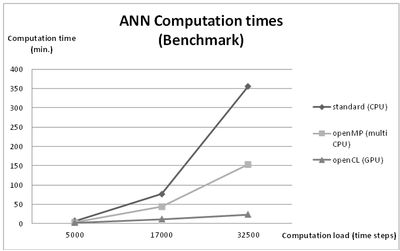

These two strategies have their limits. Simplifying the neuron model is sometimes unsatisfactory, because the simplified version may not replicate the behavior of the neuron with enough precision when the aim of the simulation is to understand the detailed behavior inside the neurons, of the neurons as units, and of the network. A more suitable alternative is to create a multi-threaded (parallel) version of the simulation program and run it on a supercomputer. In this case, the limit becomes how much money can be spent on supercomputer processing time, which is usually very expensive. This work shows that, in the specific case of neural networks, very inexpensive GPU processing (i.e. using the graphics card's processors for calculations) can achieve performance equivalent to that of a supercomputer, while costs are reduced to 1/20th and power consumption to 1/10th. In the network simulation shown here, a 142-fold speed-up is achieved on an AMD Radeon HD 5870 GPU compared with the same simulation on a quad-core Intel i7 950 processor.
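One reason a network simulation of this kind suits a GPU is that, within a time step, each neuron can be updated independently of the others. The following plain-Python sketch illustrates that data-parallel structure under stated assumptions; it is not the authors' OpenCL kernel, and `step_network`, `weights`, and the two-phase layout are hypothetical names and choices made for illustration. On a GPU, each iteration of the two loops would correspond to one work-item.

```python
def step_network(depolarization, thresholds, weights):
    """One simulation time step over all neurons (illustrative sketch).

    depolarization[i]: soma depolarization of neuron i at this step
    thresholds[i]:     firing threshold of neuron i
    weights[i][j]:     input delivered to neuron j when neuron i fires
                       (an assumed dense encoding of the connectivity)
    """
    n = len(depolarization)

    # Phase 1: threshold test. Each neuron reads only its own state,
    # so every iteration is independent (one work-item per neuron).
    spiked = [depolarization[i] >= thresholds[i] for i in range(n)]

    # Phase 2: each neuron j gathers input from the presynaptic neurons
    # that spiked. Gathering, rather than scattering, means each output
    # element is written by exactly one work-item, avoiding write conflicts.
    incoming = [
        sum(weights[i][j] for i in range(n) if spiked[i])
        for j in range(n)
    ]
    return spiked, incoming
```

With three neurons where only the first is above threshold, `step_network([1.2, 0.3, 0.9], [1.0, 1.0, 1.0], w)` returns the spike flags together with the first row of `w` as the input to be delivered on the next step.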

Latest news for Neuroinformatics 2011
